Good morning. It’s Tuesday, June 24. Today we are covering:

OpenAI's first AI device with Jony Ive won't be a wearable

Salesforce unveils Agentforce 3, its smartest agent platform yet

Google's new robotics AI can run without the cloud and still tie your shoes

Waymo's robotaxis are now available on the Uber app in Atlanta

Google Wants to Get Better at Spotting Wildfires From Space

Let’s dive in.

OpenAI's first AI device with Jony Ive won't be a wearable

By Alex Heath via The Verge

OpenAI and Jony Ive's first AI device, developed by the acquired io hardware team, won’t be a wearable or in-ear product, and isn’t expected to ship until 2026 at the earliest.

A trademark lawsuit from startup Iyo forced OpenAI to remove references to the io brand, and filings in the case revealed that OpenAI already knew about Iyo’s competing in-ear audio computer.

Court documents show that OpenAI and io explored a wide range of hardware prototypes, including earbuds and headphones, despite ultimately moving away from a wearable form factor.

𝕏: Sam Altman in a March email to an audio device startup that was asking him to personally invest in its round: “thanks but im working on something competitive so will respectfully pass!” - Alex Heath (@alexeheath)

Salesforce unveils Agentforce 3, its smartest agent platform yet

By Mike Moore via TechRadar

Salesforce has launched Agentforce 3, its most advanced AI agent platform to date, featuring a new Command Center for real-time observability, enhanced model performance insights, and natural language tools for generating instructions and case studies.

The platform now supports the Model Context Protocol (MCP), enabling seamless integration with over 100 third-party services, including AWS, Google Cloud, Stripe, IBM, and Notion, without the need for custom code.

Salesforce pitches Agentforce 3 as a way to redefine digital labor. The platform combines agents, data, and metadata, which the company says delivers higher productivity, trust, and accountability as businesses build and monitor more autonomous AI workflows.

𝕏: Agentforce 3.0 + MCP: Interoperable. Unstoppable. Connect Agents to any system, tool, or data source — securely, reliably, and at scale. Empower teams to build trustworthy Agents for the AI era. - Marc Benioff (@Benioff)


Google's new robotics AI can run without the cloud and still tie your shoes

By Ryan Whitwam via Ars Technica

Google DeepMind has launched Gemini Robotics On-Device, a vision-language-action (VLA) model that runs entirely on the robot with no cloud connection. Robots can use it to perform tasks such as tying shoes or folding shirts locally and with low latency.

Developers can fine-tune the model for their own use cases with an SDK that Google provides, needing only 50–100 demonstrations. The model generalizes well to new tasks by drawing on Gemini’s multimodal capabilities.

Safety features aren't built in, so Google recommends pairing the model with the Gemini Live API and a low-level safety controller. Ideal use cases include privacy-sensitive or connectivity-limited environments such as healthcare.

Waymo's robotaxis are now available on the Uber app in Atlanta

By Andrew J. Hawkins via The Verge

Waymo’s robotaxis are now available in Atlanta through the Uber app, making it the second city after Austin to host the companies' autonomous ridehail partnership.

The service is limited to a 65-square-mile area covering neighborhoods such as Downtown, Buckhead, and Capitol View, and does not include highway or airport trips. Riders can opt in through Uber’s Ride Preferences to increase their chances of being matched with a robotaxi.

Uber handles fleet operations, including cleaning and charging, through Avomo, while Waymo oversees testing and support. The companies share revenue, but the exact split has not been disclosed.

Google Wants to Get Better at Spotting Wildfires From Space

By Boone Ashworth via WIRED

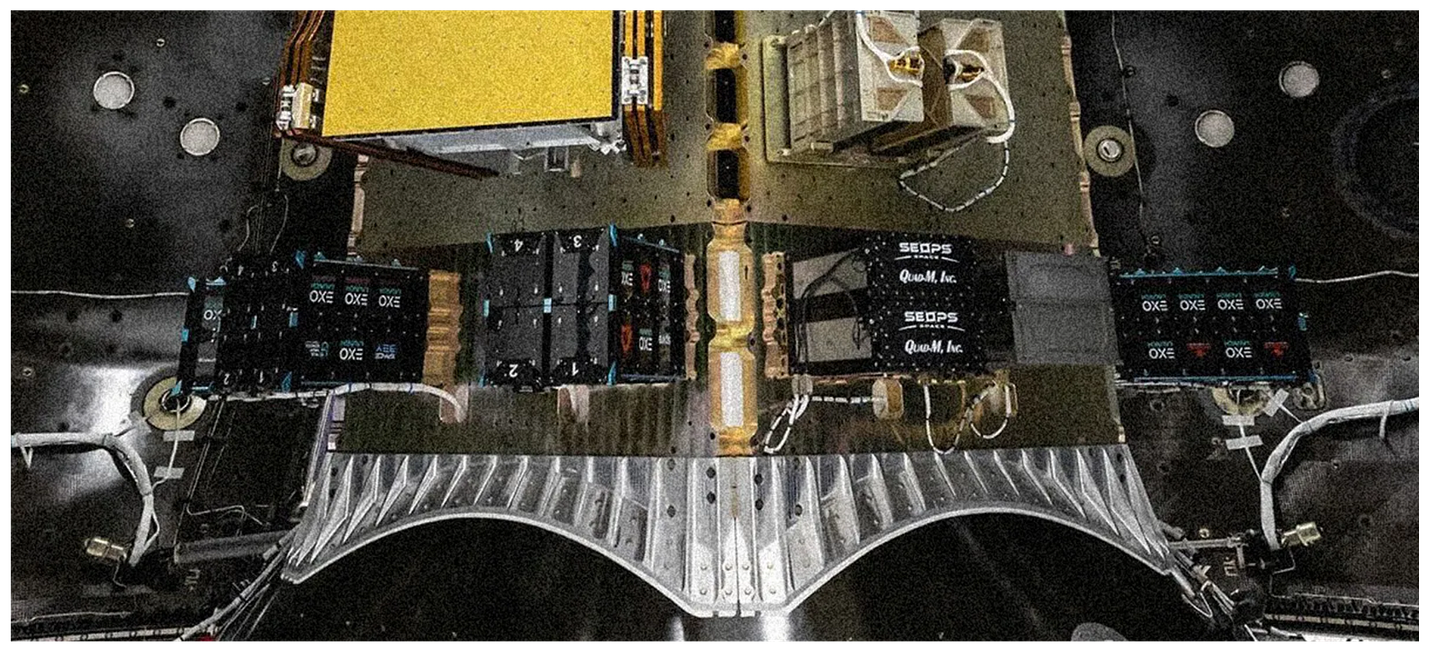

Google’s FireSat project, a partnership with the Earth Fire Alliance and Muon Space, aims to launch 52 satellites by 2029 to detect wildfires in near real time using AI-enhanced imagery captured every 15 minutes.

Unlike current satellite systems, FireSat will combine high-resolution visible/infrared imagery with cryo-cooled thermal imagery to reduce false positives and better identify early-stage fires. The first satellite launched in March 2025, and three more are slated for early 2026.

While the initiative may bolster wildfire response, experts caution that data delivery to first responders, reliance on private tech, and Google’s own environmental impact raise concerns about access, continuity, and accountability.

We're thrilled to bring you ad-free news. To keep it that way, we need your support. Your pledge helps us stay independent and deliver high-quality insights while exploring new ideas. What would you love to see next? Share your thoughts and help shape the future of Newslit Daily. Thank you for being part of this journey!


Thanks for reading to the bottom of today’s Newslit Daily and soaking in this morning’s highlights.

I hope you found it interesting. If you have any questions or feedback, let me know by hitting reply.

Take care and see you tomorrow!
